https://github.com/ptrmu/ros2_shared

GitHub - ptrmu/ros2_shared: Shared utilities for ros2

Shared utilities for ros2. Contribute to ptrmu/ros2_shared development by creating an account on GitHub.

github.com

https://github.com/GalBrandwine/tello_ros#installation

GitHub - GalBrandwine/tello_ros: C++ ROS2 driver for DJI Tello drones

C++ ROS2 driver for DJI Tello drones. Contribute to GalBrandwine/tello_ros development by creating an account on GitHub.

github.com

http://whatimade.today/ros2-helping-the-community/

ROS2 - Helping the community

Hey all, How are you doing these days? For the last couple of months, I've been learning and implementing all ROS2 index tutorials. ROS stands for Robotic Operation System. But it stands for a whole lot more! From a state of the art C++ implementations, th

www.whatimade.today

1. package install

source /opt/ros/foxy/setup.bash

mkdir -p tello_ws/src

cd tello_ws/src

git clone https://github.com/ptrmu/ros2_shared.git

git clone https://github.com/GalBrandwine/tello_ros.git

cd ..

apt-get install ros-foxy-gazebo-rospkgs

2. file edit

gedit src/tello_ros/tello_driver/src/video_socket.cpp#include "tello_driver_node.hpp" //이부분 .h에서 .hpp로 수정

#include <libavutil/frame.h>

#include <opencv2/highgui.hpp>

#include <camera_calibration_parsers/parse.hpp> //이 부분 parse.h 에서 parse.hpp로 수정

namespace tello_driver

{

// Notes on Tello video:

// -- frames are always 960x720.

// -- frames are split into UDP packets of length 1460.

// -- normal frames are ~10k, or about 8 UDP packets.

// -- keyframes are ~35k, or about 25 UDP packets.

// -- keyframes are always preceded by an 8-byte UDP packet and a 13-byte UDP packet -- markers?

// -- the h264 parser will consume the 8-byte packet, the 13-byte packet and the entire keyframe without

// generating a frame. Presumably the keyframe is stored in the parser and referenced later.

VideoSocket::VideoSocket(TelloDriverNode *driver, unsigned short video_port, const std::string &camera_info_path) :

TelloSocket(driver, video_port)

{

std::string camera_name;

if (camera_calibration_parsers::readCalibration(camera_info_path, camera_name, camera_info_msg_)) {

RCLCPP_INFO(driver_->get_logger(), "Parsed camera info for '%s'", camera_name.c_str());

} else {

RCLCPP_ERROR(driver_->get_logger(), "Cannot get camera info");

}

buffer_ = std::vector<unsigned char>(2048);

seq_buffer_ = std::vector<unsigned char>(65536);

listen();

}

// Process a video packet from the drone

void VideoSocket::process_packet(size_t r)

{

std::lock_guard<std::mutex> lock(mtx_);

receive_time_ = driver_->now();

if (!receiving_) {

// First packet

RCLCPP_INFO(driver_->get_logger(), "Receiving video");

receiving_ = true;

seq_buffer_next_ = 0;

seq_buffer_num_packets_ = 0;

}

if (seq_buffer_next_ + r >= seq_buffer_.size()) {

RCLCPP_ERROR(driver_->get_logger(), "Video buffer overflow, dropping sequence");

seq_buffer_next_ = 0;

seq_buffer_num_packets_ = 0;

return;

}

std::copy(buffer_.begin(), buffer_.begin() + r, seq_buffer_.begin() + seq_buffer_next_);

seq_buffer_next_ += r;

seq_buffer_num_packets_++;

// If the packet is < 1460 bytes then it's the last packet in the sequence

if (r < 1460) {

decode_frames();

seq_buffer_next_ = 0;

seq_buffer_num_packets_ = 0;

}

}

// Decode frames

void VideoSocket::decode_frames()

{

size_t next = 0;

try {

while (next < seq_buffer_next_) {

// Parse h264

ssize_t consumed = decoder_.parse(seq_buffer_.data() + next, seq_buffer_next_ - next);

// Is a frame available?

if (decoder_.is_frame_available()) {

// Decode the frame

const AVFrame &frame = decoder_.decode_frame();

// Convert pixels from YUV420P to BGR24

int size = converter_.predict_size(frame.width, frame.height);

unsigned char bgr24[size];

converter_.convert(frame, bgr24);

// Convert to cv::Mat

cv::Mat mat{frame.height, frame.width, CV_8UC3, bgr24};

// Display

cv::imshow("frame", mat);

cv::waitKey(1);

// Synchronize ROS messages

auto stamp = driver_->now();

if (driver_->count_subscribers(driver_->image_pub_->get_topic_name()) > 0) {

std_msgs::msg::Header header{};

header.frame_id = "camera_frame";

header.stamp = stamp;

cv_bridge::CvImage cv_image{header, sensor_msgs::image_encodings::BGR8, mat};

sensor_msgs::msg::Image sensor_image_msg;

cv_image.toImageMsg(sensor_image_msg);

driver_->image_pub_->publish(sensor_image_msg);

}

if (driver_->count_subscribers(driver_->camera_info_pub_->get_topic_name()) > 0) {

camera_info_msg_.header.stamp = stamp;

driver_->camera_info_pub_->publish(camera_info_msg_);

}

}

next += consumed;

}

}

catch (std::runtime_error e) {

RCLCPP_ERROR(driver_->get_logger(), e.what());

}

}

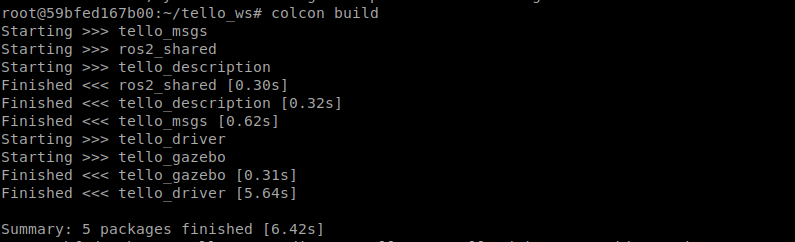

} // namespace tello_driver3. build

4.gazebo simulation

https://github.com/GalBrandwine/tello_ros/tree/master/tello_gazebo

apt install gazebo9-common

cd ~/tello_ros_ws

source install/setup.bash

export GAZEBO_MODEL_PATH=${PWD}/install/tello_gazebo/share/tello_gazebo/models

source /usr/share/gazebo/setup.sh

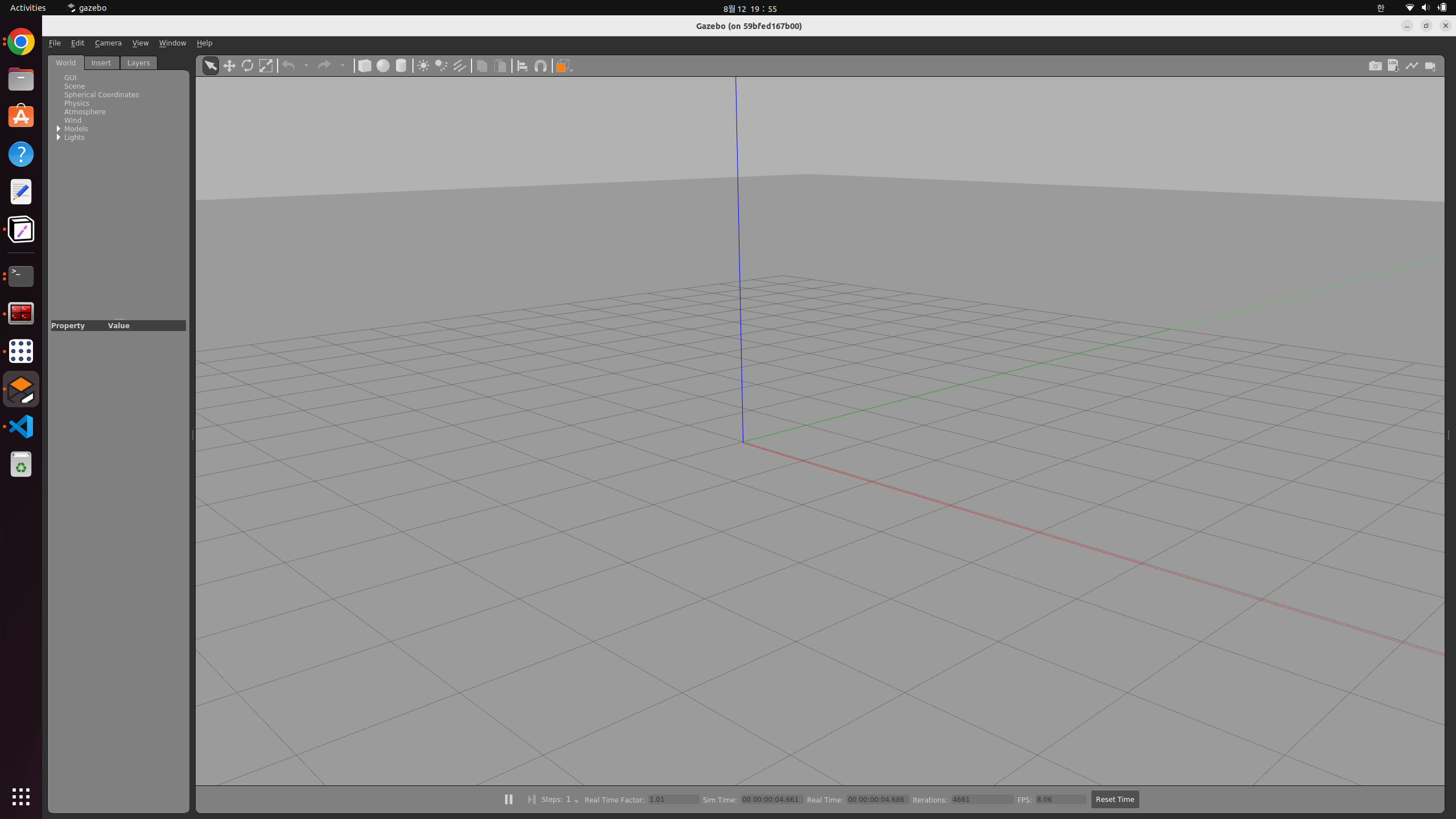

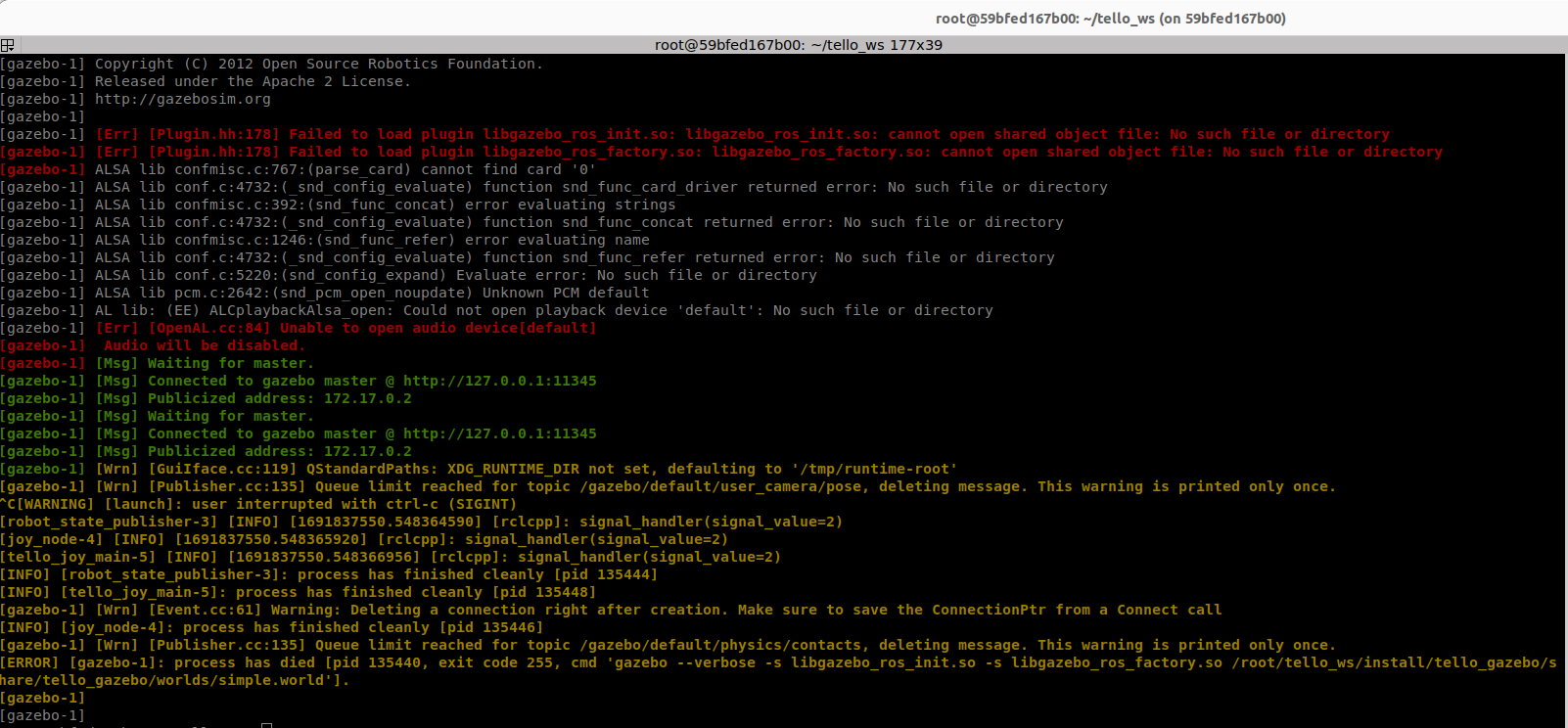

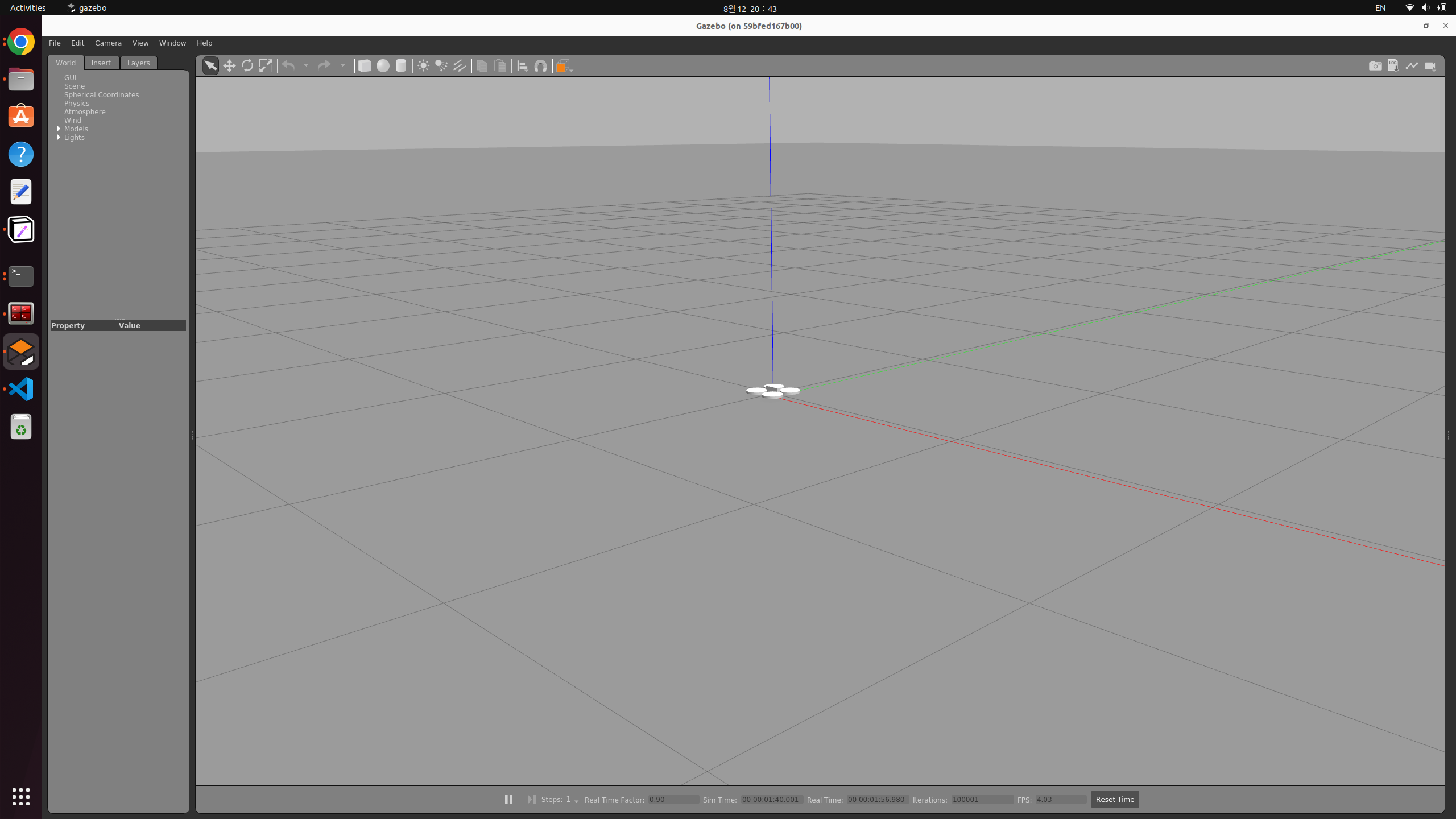

ros2 launch tello_gazebo simple_launch.py결과

드론이 뜨지 않고 에러가 많이 발생했다 해결해보자

Trouble shooting

file edit

1. simple_launch.py 파일 수정

urdf Spawn

방법 1 : /root/tello_ws/install/tello_gazebo/lib/tello_gazebo/transformations.py 추가

방법 2 : inject_entity.py에 quaternion from euler 메소드 추가

def quaternion_from_euler(roll, pitch, yaw):

"""

Converts euler roll, pitch, yaw to quaternion (w in last place)

quat = [x, y, z, w]

Bellow should be replaced when porting for ROS 2 Python tf_conversions is done.

"""

cy = math.cos(yaw * 0.5)

sy = math.sin(yaw * 0.5)

cp = math.cos(pitch * 0.5)

sp = math.sin(pitch * 0.5)

cr = math.cos(roll * 0.5)

sr = math.sin(roll * 0.5)

q = [0] * 4

q[0] = cy * cp * cr + sy * sp * sr

q[1] = cy * cp * sr - sy * sp * cr

q[2] = sy * cp * sr + cy * sp * cr

q[3] = sy * cp * cr - cy * sp * sr

return q

'Robotics 🤖 > Drone SW 🚁' 카테고리의 다른 글

| DJI Tello ROS kinetic 프로그래밍 환경 구축하기 (1) | 2023.08.14 |

|---|---|

| DJI Tello drone ROS2 시뮬레이션에서 구동하기(Gazebo) [3] (0) | 2023.08.13 |

| DJI Tello 프로그래밍을 위한 ros2 foxy 설치하기(docker) [1] (0) | 2023.08.12 |

| DJI Tello ros2 프로젝트 (0) | 2023.08.12 |

| DJI Tello 파이썬 프로그래밍하기 [1] (0) | 2023.08.04 |